Ever wondered if AI can be taught not just to know things, but also to be… well, less annoying? Let’s talk about RLHF…

When you’re training a generative AI model, you want it to produce useful, insightful, and non-disastrous outputs. Reinforcement Learning from Human Feedback (RLHF) is like teaching your AI how to behave through a mix of praise, constructive criticism, and the occasional facepalm.

In RLHF, humans rank or score the AI’s outputs. Those judgments are typically used to train a reward model, and the AI is then tuned to produce outputs that the reward model scores highly, so it gradually learns what humans actually like. It’s like when your boss gives you feedback, but imagine your boss is millions of people on the internet, each giving your work a thumbs-up or thumbs-down. No pressure, right?
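To make the "learning from rankings" step a bit more concrete, here is a minimal sketch of how pairwise human preferences could be turned into a reward signal. It assumes a Bradley-Terry style model with a tiny linear reward function. The feature names, the toy data, and the helper names `train_reward_model` and `reward` are all made up for illustration; real systems train a neural reward model on far more data.

```python
import math

def train_reward_model(preferences, features, lr=0.5, epochs=200):
    """Fit a linear reward r(x) = w . x from pairwise human preferences.

    preferences: list of (preferred_id, rejected_id) pairs
    features:    dict mapping response id -> list of floats
    """
    dim = len(next(iter(features.values())))
    w = [0.0] * dim
    for _ in range(epochs):
        for win, lose in preferences:
            diff = [a - b for a, b in zip(features[win], features[lose])]
            margin = sum(wi * di for wi, di in zip(w, diff))
            # Bradley-Terry: P(win is preferred) = sigmoid(r(win) - r(lose))
            p = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent on the log-likelihood of the human choice
            w = [wi + lr * (1.0 - p) * di for wi, di in zip(w, diff)]
    return w

def reward(w, x):
    """Score a response's feature vector with the learned weights."""
    return sum(wi * xi for wi, xi in zip(w, x))

# Hypothetical toy features per response: [helpfulness, sarcasm]
features = {
    "helpful": [1.0, 0.0],
    "sarcastic": [0.2, 1.0],
    "vague": [0.1, 0.0],
}
# Each pair means "humans preferred the first response over the second"
preferences = [
    ("helpful", "sarcastic"),
    ("helpful", "vague"),
    ("vague", "sarcastic"),
]

w = train_reward_model(preferences, features)
ranking = sorted(features, key=lambda k: reward(w, features[k]), reverse=True)
print(ranking)
```

In a full RLHF pipeline, the model being tuned would then be optimized to produce responses that this kind of reward function scores highly. That second stage is left out here to keep the sketch short.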

This process is typically carried out by the organizations that build foundation models, the large-scale models that serve as the backbone for many AI applications. These organizations use RLHF to fine-tune their models so that they align better with human expectations and preferences.

Why is RLHF important? Without it, AI models can get stuck in “technically correct but awkward” territory. With RLHF, models learn to generate responses that are not just factually right, but also contextually appropriate—less of the cringe and more of the “okay, that’s actually useful.”

There are different types of feedback that can be used in RLHF: explicit feedback, like direct ratings from humans, and implicit feedback, such as observing user behavior to infer preferences. Both types play a role in shaping how the model learns and adapts, making it more capable of understanding the subtleties of human communication.
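As a rough illustration of the two feedback types, the sketch below turns both explicit ratings and implicit behavior into the same "preferred vs. rejected" pairs. Everything here is an assumption made for the example: the signal names (`copied`, `regenerated`, `abandoned`), their weights, and the response ids are hypothetical, and a real system would need far more careful handling of noisy behavioral data.

```python
from itertools import combinations

def pairs_from_scores(scores):
    """Turn per-response scores into (preferred, rejected) pairs."""
    pairs = []
    for (a, score_a), (b, score_b) in combinations(scores.items(), 2):
        if score_a > score_b:
            pairs.append((a, b))
        elif score_b > score_a:
            pairs.append((b, a))
        # Ties carry no preference signal, so they are skipped
    return pairs

# Hypothetical weights for behavioral signals (assumed values, not measured ones)
SIGNAL_WEIGHTS = {"copied": 1.0, "regenerated": -1.0, "abandoned": -0.5}

def scores_from_behavior(events):
    """Implicit feedback: infer a rough score per response from user actions."""
    scores = {}
    for response_id, signal in events:
        scores[response_id] = scores.get(response_id, 0.0) + SIGNAL_WEIGHTS.get(signal, 0.0)
    return scores

# Explicit feedback: direct 1-5 ratings from human raters
explicit_pairs = pairs_from_scores({"resp_a": 5, "resp_b": 2, "resp_c": 2})

# Implicit feedback: observed behavior, converted to scores first
implicit_pairs = pairs_from_scores(scores_from_behavior([
    ("resp_d", "copied"),
    ("resp_e", "regenerated"),
]))

print(explicit_pairs)
print(implicit_pairs)
```

Putting both sources into one pair format is a design choice for this sketch: it lets a single preference-learning step consume either kind of feedback, though implicit signals would usually deserve less trust than direct ratings.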

But it ain’t easy. Challenges include keeping bias out of the feedback loop, ensuring consistent quality, and the fact that “what people like” can be… a moving target (because, well, humans are complicated).

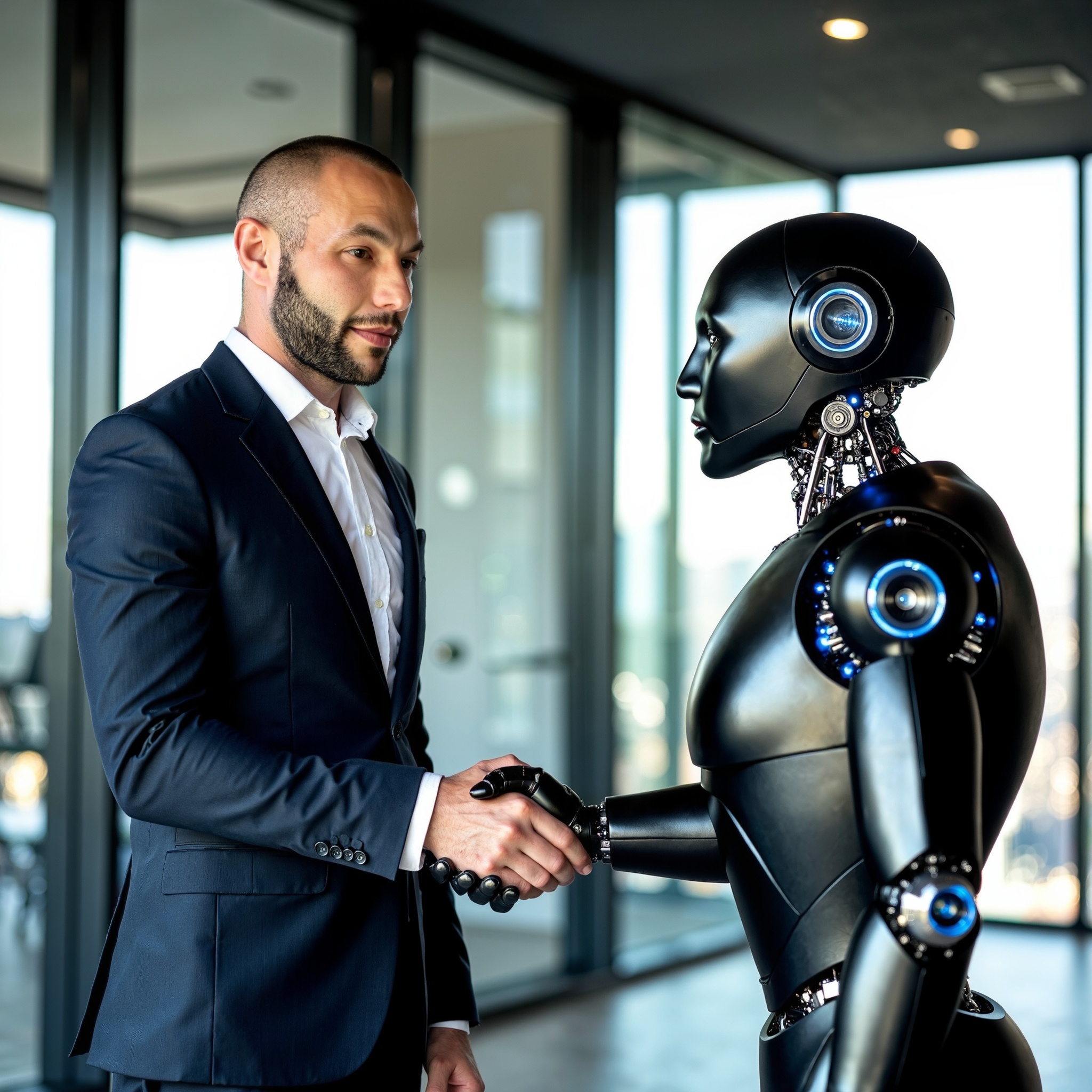

RLHF is one of the key ways we try to make AI a little more like a wise friend and a little less like a frustrating robot. It’s an ongoing dance between human preference and machine learning, and sometimes, you just have to teach the AI that “no, sarcasm is not always the answer.”