Prompt engineering … a necessary business skill set that can be leveraged with bad intentions.

What is Prompt Engineering?

Prompt engineering is the art and science of crafting inputs, known as prompts, to effectively communicate with generative AI models. These models respond to textual inputs by generating human-like text based on the prompt’s instructions. In simple terms, prompt engineering involves designing these prompts to elicit specific and useful responses from AI systems.
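To make "designing a prompt" concrete, here is a minimal sketch in Python that contrasts a vague prompt with a structured one. The `PromptSpec` class, the `build_prompt` helper, and the example company and product are all hypothetical, invented for illustration. No particular AI service or library is assumed; the code only assembles the text you would send to a model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a prompt (not a library API)."""
    role: str
    task: str
    audience: str
    constraints: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one plain-text prompt."""
    lines = [
        f"You are {spec.role}.",
        f"Task: {spec.task}",
        f"Audience: {spec.audience}",
    ]
    if spec.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in spec.constraints)
    return "\n".join(lines)


# A vague prompt leaves the model to guess at tone, length, and audience.
vague_prompt = "Write something about our new product."

# A structured prompt states the role, task, audience, and limits explicitly.
specific_prompt = build_prompt(
    PromptSpec(
        role="a copywriter for a small outdoor-gear company",  # made-up example
        task="Write a three-sentence product announcement for a lightweight tent.",
        audience="weekend hikers",
        constraints=["Friendly, plain tone", "No unverifiable claims", "Under 60 words"],
    )
)

print(specific_prompt)
```

Keeping the parts separate makes it easy to change one element, such as the audience or a constraint, and compare how the model's response shifts.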

Prompt engineering can create significant value for businesses in various ways …

✨ Content Creation: Businesses can use AI to generate marketing copy, social media posts, blogs, and even complex reports. Well-crafted prompts ensure that the generated content aligns with the company’s voice and messaging.

👩‍🔧 Customer Support: AI chatbots and virtual assistants rely on precise prompts to understand and respond to customer queries accurately, enhancing customer satisfaction and reducing support costs.

📊 Data Analysis and Insights: By asking the right questions, businesses can leverage AI to analyze large datasets, extract valuable insights, and make data-driven decisions.

🛠 Productivity Tools: AI can automate routine tasks, schedule meetings, summarize documents, and more. Effective prompt engineering ensures these tools operate efficiently and provide real value.

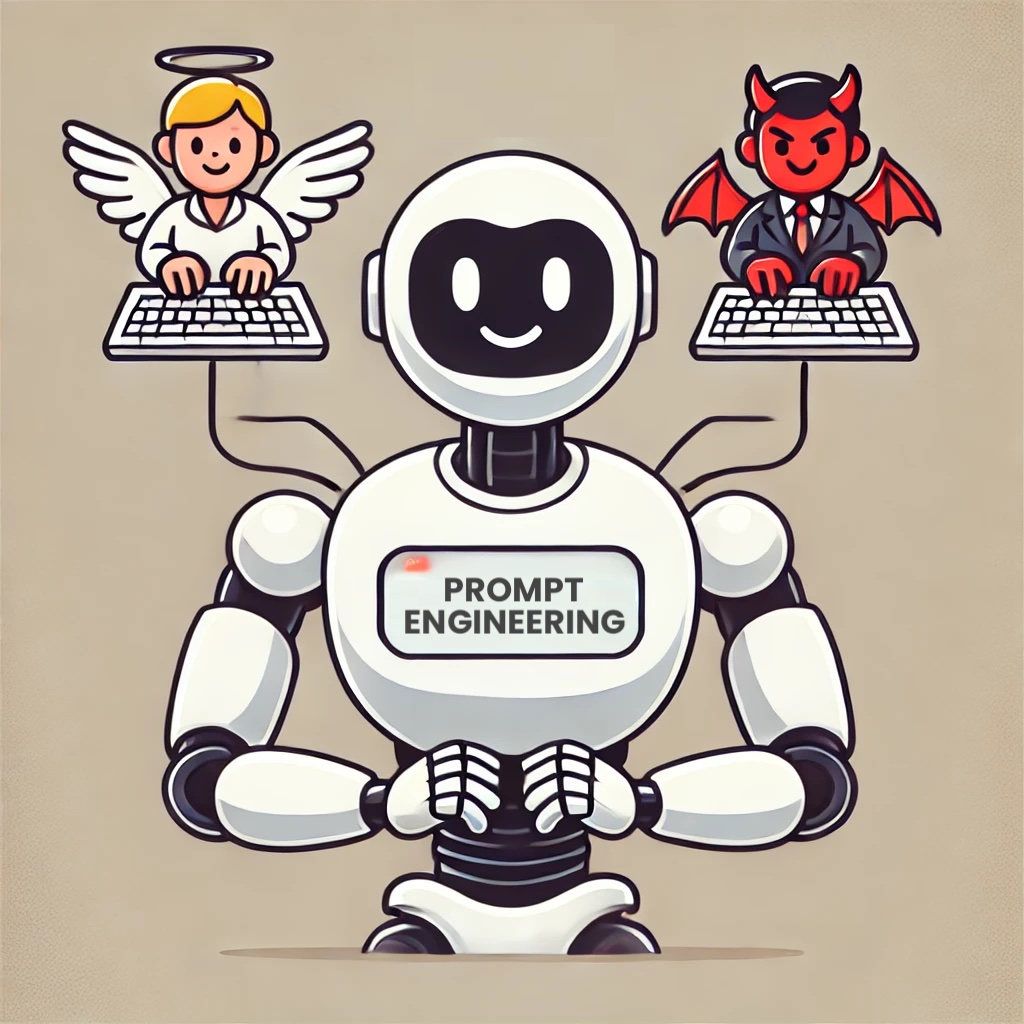

When a good skill is used with bad intentions …

While prompt engineering holds immense potential, it also raises ethical concerns. The difference between good and malicious prompt engineering can come down to nothing more than a change in intent.

⚪ Good Prompt Engineering: Aims to maximize the utility and safety of AI systems. It focuses on creating prompts that drive beneficial outcomes, ensure accuracy, and maintain ethical standards.

⚫ Malicious Prompt Engineering: Intends to exploit AI models to produce harmful, misleading, or undesirable outcomes. This can include generating fake news, perpetuating biases, or manipulating outputs to deceive users.

The challenge is that the same techniques for influencing a model’s output can serve both positive and negative purposes. For instance, a prompt designed to generate a persuasive marketing message can be slightly altered to create misleading information or propaganda.

The concept of “white hat” and “black hat” is well known in cybersecurity, where white hat hackers work to protect systems and black hat hackers seek to exploit them. Could we end up with a similar distinction among highly skilled prompt engineers, based on their intentions?